Spending hours copying and pasting information between websites and spreadsheets? You’re not alone. Web data entry consumes valuable time that could be better spent on strategic tasks that grow your business.

Manual data entry isn’t just tedious; it’s also error-prone and expensive. Industry surveys have estimated that poor data quality costs businesses as much as 12% of their revenue, and human error during manual entry is one common source of bad data.

The good news? You can automate web data entry using the right tools and techniques. This guide will show you practical methods to streamline your data collection, reduce errors, and free up time for more important work.

Understanding Web Data Entry Automation

Web data entry automation uses software to extract data from websites, process it, and enter it into your own systems without manual intervention. Instead of copying information from web pages into databases or spreadsheets by hand, you let automation tools handle these tasks programmatically.

This process typically involves three key components:

Data Extraction: Software identifies and pulls specific information from web pages, such as product prices, contact details, or inventory levels.

Data Processing: The extracted information gets cleaned, formatted, and validated according to your requirements.

Data Input: The processed data automatically populates your target system, whether that’s a CRM, spreadsheet, or database.

Automation can handle various data types, from simple text and numbers to complex structured information like product catalogs or customer records.

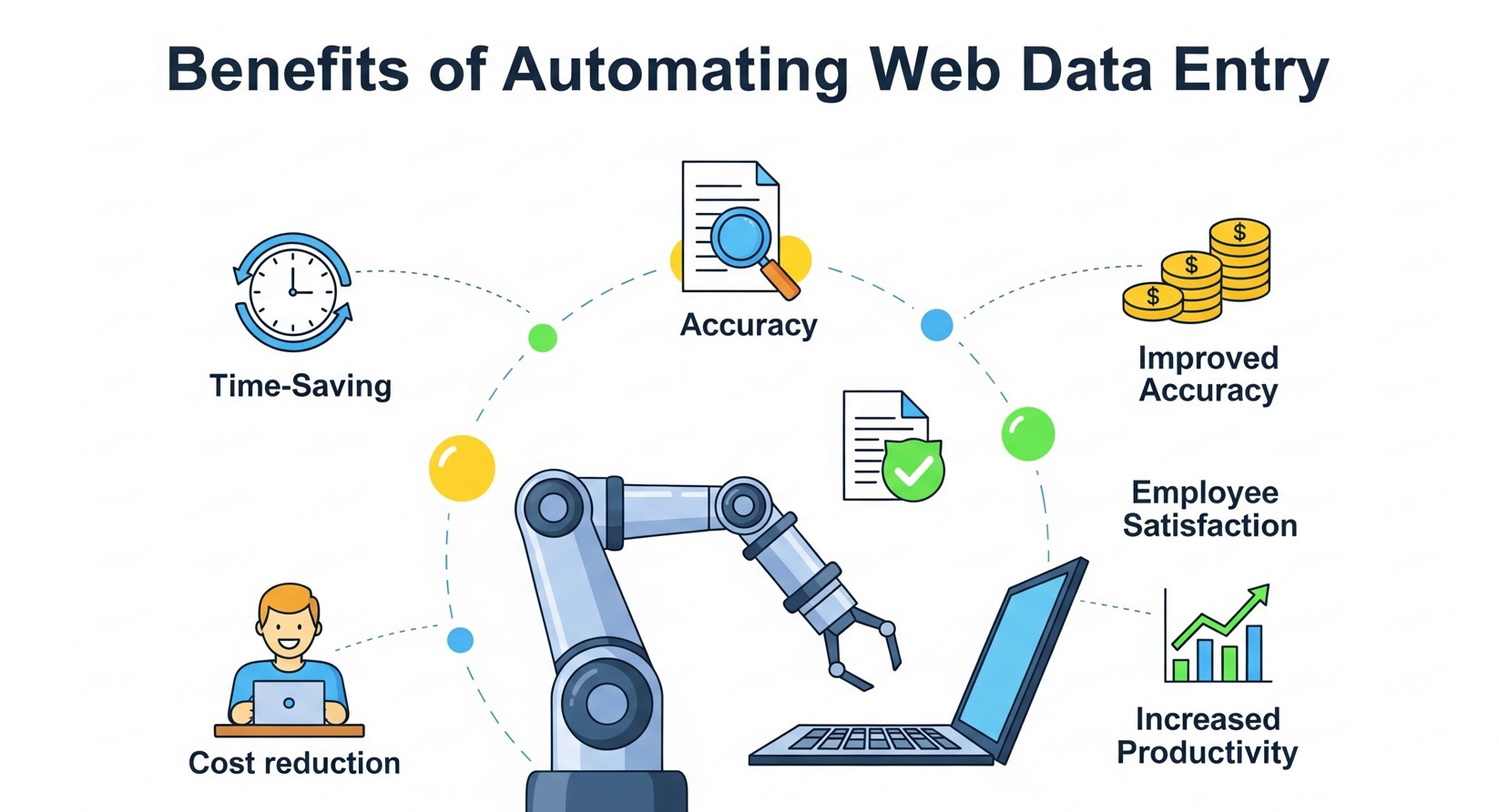

Benefits of Automating Web Data Entry

Increased Accuracy and Consistency

Human error is inevitable when entering data by hand. Typos, missed fields, and formatting inconsistencies create problems downstream. Automated systems greatly reduce these issues because they apply the same rules and validation checks every time.

Significant Time Savings

Tasks that once took hours can often be completed in minutes. Instead of manually visiting dozens of websites to collect pricing information, an automation tool can gather the same data in a fraction of the time.

Scalability

Manual data entry doesn’t scale well. As your business grows, the volume of required data entry grows too. Automation handles increased workloads without a proportional increase in staffing costs.

Real-Time Data Updates

Automated systems can run continuously, ensuring your data stays current. This is particularly valuable for time-sensitive information like stock prices, inventory levels, or competitor pricing.

Cost Reduction

While automation tools require an upfront investment, they often pay for themselves through reduced labor costs and improved efficiency. For high-volume, repetitive tasks, the return on investment can become apparent within weeks of implementation.

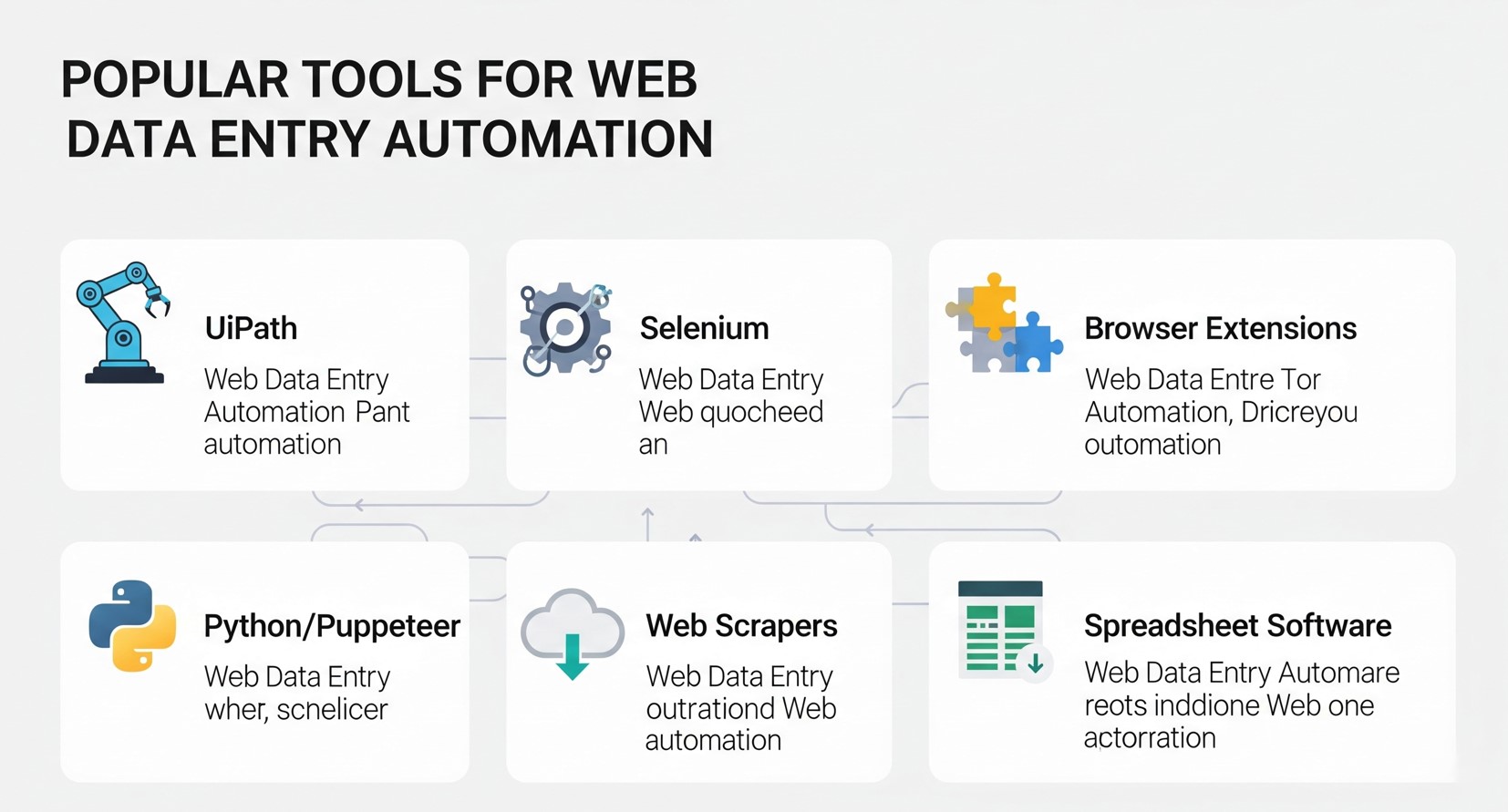

Popular Tools for Web Data Entry Automation

Web Scraping Tools

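Beautiful Soup and Scrapy are Python tools well suited to developers who want complete control over their data extraction process. Beautiful Soup is an HTML/XML parsing library, usually paired with an HTTP client such as Requests, while Scrapy is a full crawling framework. Both can be customized for specific needs, though neither executes JavaScript on its own.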

Octoparse offers a user-friendly visual interface for non-programmers. You can create scraping tasks by clicking on webpage elements, making it accessible to business users without coding experience.

ParseHub provides both free and paid plans for extracting data from dynamic websites that use JavaScript. It’s particularly useful for sites with interactive elements or infinite scroll features.

Browser Automation Tools

Selenium automates web browsers and can interact with websites much as a human would: clicking, typing, and navigating between pages. It’s excellent for sites that require logging in or complex navigation paths.

Puppeteer is a Node.js library that controls Chrome or Chromium (headless by default) and excels at scraping single-page applications and JavaScript-heavy websites.

No-Code Automation Platforms

Zapier connects different web applications and can automate simple data transfer tasks without programming knowledge. It’s ideal for moving data between popular business tools.

Microsoft Power Automate integrates well with Microsoft 365 and other Microsoft products, making it a natural fit for businesses already invested in the Microsoft ecosystem.

UiPath offers robotic process automation (RPA) capabilities that can handle complex workflows involving multiple applications and systems.

Step-by-Step Implementation Guide

Step 1: Define Your Data Requirements

Start by clearly identifying what information you need to collect and where it will be used. Create a detailed specification that includes:

- Source websites and specific pages

- Exact data fields required

- Desired output format

- Update frequency requirements

- Data validation rules
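Writing the specification down as a small data structure lets your scripts check every record against it. The following is a minimal Python (3.9+) sketch assuming a product-listing job; `FieldSpec`, `ExtractionSpec`, the example URL, and the regex rules are hypothetical placeholders, not part of any tool.

```python
import re
from dataclasses import dataclass

@dataclass(frozen=True)
class FieldSpec:
    """One data field and the rule its cleaned value must satisfy."""
    name: str
    pattern: str          # regular expression the whole value must match
    required: bool = True

@dataclass
class ExtractionSpec:
    """Hypothetical container for the Step 1 checklist."""
    source_urls: list[str]
    fields: list[FieldSpec]
    output_format: str = "csv"
    update_every_hours: int = 24

    def validate(self, record: dict) -> list[str]:
        """Return a list of problems; an empty list means the record passes."""
        problems = []
        for spec in self.fields:
            value = record.get(spec.name)
            if value in (None, ""):
                if spec.required:
                    problems.append(f"missing {spec.name}")
                continue
            if not re.fullmatch(spec.pattern, str(value)):
                problems.append(f"{spec.name}={value!r} does not match {spec.pattern}")
        return problems

spec = ExtractionSpec(
    source_urls=["https://example.com/products"],   # placeholder source
    fields=[
        FieldSpec("name", r".+"),
        FieldSpec("price", r"\d+\.\d{2}"),
        FieldSpec("sku", r"[A-Z]{3}-\d{4}", required=False),
    ],
)
print(spec.validate({"name": "Widget", "price": "19.99"}))  # → []
```

Keeping the rules in one place means the extraction, processing, and monitoring steps can all validate against the same definition.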

Step 2: Choose the Right Tool

Select automation tools based on your technical expertise, budget, and specific requirements. Consider factors like:

- Website complexity (static vs. dynamic content)

- Volume of data to be processed

- Required update frequency

- Integration needs with existing systems

- Available budget and resources

Step 3: Set Up Data Extraction

Configure your chosen tool to identify and extract the required information. This typically involves:

- Mapping webpage elements to data fields

- Setting up extraction rules and filters

- Implementing error handling for missing or changed content

- Testing extraction accuracy with sample data
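To illustrate element-to-field mapping and missing-field handling without third-party dependencies, this sketch uses Python’s standard-library `html.parser`; Beautiful Soup or Scrapy selectors would make the same job shorter. It assumes a static page where each product sits in an element with class `product` and its fields are flat child elements with classes `name` and `price`. Those class names and the sample markup are invented for illustration.

```python
from html.parser import HTMLParser

class ProductParser(HTMLParser):
    """Map elements to data fields by CSS class.

    Assumes each product is wrapped in an element with class "product" and that
    each field is a flat child element (no nested tags inside the field's text).
    """

    def __init__(self, field_classes):
        super().__init__()
        self.field_classes = field_classes  # CSS class -> output field name
        self.records = []
        self._current = None  # record for the product being parsed
        self._field = None    # field whose text is being captured

    def handle_starttag(self, tag, attrs):
        classes = (dict(attrs).get("class") or "").split()
        if "product" in classes:
            self._current = {}
            self.records.append(self._current)
        elif self._current is not None:
            for cls in classes:
                if cls in self.field_classes:
                    self._field = self.field_classes[cls]

    def handle_endtag(self, tag):
        self._field = None

    def handle_data(self, data):
        if self._current is not None and self._field and data.strip():
            self._current[self._field] = self._current.get(self._field, "") + data.strip()


def extract(html, field_classes, required=("name", "price")):
    """Return (complete, incomplete) records so missing fields are never silently dropped."""
    parser = ProductParser(field_classes)
    parser.feed(html)
    parser.close()
    complete, incomplete = [], []
    for record in parser.records:
        missing = [f for f in required if not record.get(f)]
        (incomplete if missing else complete).append(record)
    return complete, incomplete


SAMPLE_HTML = """
<div class="product"><h2 class="name">Widget</h2><span class="price">$19.99</span></div>
<div class="product"><h2 class="name">Gadget</h2></div>
"""
complete, incomplete = extract(SAMPLE_HTML, {"name": "name", "price": "price"})
# complete   → [{'name': 'Widget', 'price': '$19.99'}]
# incomplete → [{'name': 'Gadget'}]  (price missing: flag for review)
```

Returning incomplete records separately, rather than discarding them, is what makes later monitoring possible: a sudden jump in incomplete records usually means the page layout changed.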

Step 4: Implement Data Processing

Clean and format extracted data to meet your requirements:

- Remove unnecessary characters or formatting

- Standardize data formats (dates, phone numbers, etc.)

- Validate data against business rules

- Handle duplicate or conflicting information
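A minimal sketch of the cleaning step, assuming scraped records are dictionaries holding `sku`, `price`, and `updated` strings. The accepted date formats, the positive-price rule, and the last-record-wins conflict policy are example choices, not requirements.

```python
import re
from datetime import datetime
from decimal import Decimal, InvalidOperation

def clean_price(raw):
    """Strip currency symbols, thousands separators, and spaces; return a Decimal or None.

    Assumes US-style numbers ("1,299.00"); a decimal comma ("19,99") needs different handling.
    """
    digits = re.sub(r"[^\d.]", "", raw or "")
    try:
        return Decimal(digits) if digits else None
    except InvalidOperation:
        return None

def normalize_date(raw, formats=("%m/%d/%Y", "%d %b %Y", "%Y-%m-%d")):
    """Try each known input format and return an ISO 8601 date string, or None."""
    for fmt in formats:
        try:
            return datetime.strptime((raw or "").strip(), fmt).date().isoformat()
        except ValueError:
            continue
    return None

def process(records):
    """Clean records, drop those that break a business rule, and de-duplicate by SKU."""
    by_sku = {}
    for rec in records:
        price = clean_price(rec.get("price"))
        if price is None or price <= 0:   # example rule: every item needs a positive price
            continue
        sku = (rec.get("sku") or "").strip().upper()
        if not sku:
            continue
        # Conflict policy (an assumption): the later record for a SKU wins.
        by_sku[sku] = {"sku": sku, "price": price, "updated": normalize_date(rec.get("updated"))}
    return list(by_sku.values())
```

Using `Decimal` rather than `float` keeps prices exact, which matters once values are summed or compared.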

Step 5: Automate Data Input

Set up automated transfer of processed data to your target systems:

- Configure database connections or API integrations

- Map processed data fields to destination fields

- Implement data validation checks

- Set up error logging and notification systems
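For the input step, the sketch below writes to SQLite through Python’s built-in `sqlite3` module, standing in for whatever database or API your target system exposes. The `products` table, its columns, and the integer-cents price format are assumptions. A database-level `CHECK` constraint acts as a final validation layer, and failed rows are logged instead of stopping the whole run.

```python
import logging
import sqlite3

logging.basicConfig(level=logging.INFO)
log = logging.getLogger("loader")

def load(conn, records):
    """Insert or update records in a `products` table; log and skip any row that fails.

    Prices are stored as integer cents (an assumption) to avoid float rounding.
    """
    with conn:
        conn.execute(
            "CREATE TABLE IF NOT EXISTS products ("
            " sku TEXT PRIMARY KEY,"
            " price_cents INTEGER NOT NULL CHECK (price_cents > 0),"  # database-side validation
            " updated TEXT)"
        )
    loaded = 0
    for rec in records:
        try:
            with conn:  # commits this row on success, rolls it back on error
                conn.execute(
                    "INSERT OR REPLACE INTO products (sku, price_cents, updated) VALUES (?, ?, ?)",
                    (rec["sku"], rec["price_cents"], rec.get("updated")),
                )
            loaded += 1
        except (KeyError, sqlite3.Error) as exc:
            log.error("skipped record %r: %s", rec, exc)
    return loaded
```

Committing row by row trades some speed for isolation: one bad record is logged and skipped without discarding the rest of the batch.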

Step 6: Monitor and Maintain

Establish ongoing monitoring to ensure continued reliability:

- Set up alerts for extraction failures

- Regularly review data quality metrics

- Update extraction rules when websites change

- Monitor system performance and optimization opportunities
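Monitoring can start as simply as comparing how many items a run extracted against how many it expected. The function below is a hypothetical sketch; the 95% threshold is an arbitrary example, and `alert` can be any callable, such as a function that sends an email or chat message.

```python
import logging

log = logging.getLogger("monitor")

def check_run(expected, extracted, rejected, min_success_rate=0.95, alert=log.warning):
    """Summarize one scraping run and raise an alert if extraction quality drops.

    `expected` is how many items the run should have found (for example, the
    number of product pages queued); `alert` is any callable that accepts a message.
    """
    success_rate = extracted / expected if expected else 0.0
    if success_rate < min_success_rate:
        alert(f"extraction success rate {success_rate:.1%} is below "
              f"{min_success_rate:.0%}; the page layout may have changed")
    return {"expected": expected, "extracted": extracted, "rejected": rejected,
            "success_rate": round(success_rate, 3)}
```

Storing the returned dictionary for every run gives you the data quality history needed for regular reviews.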

Best Practices for Successful Automation

Respect Website Terms of Service

Always review and comply with website terms of service and robots.txt files. Some sites explicitly prohibit automated data collection, and violating these terms could result in legal issues or IP blocking.
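Python’s standard library can check robots.txt rules for you, although terms of service still need a human read. This sketch parses an inline sample file so it runs offline; the user-agent string and the rules are made up. Against a live site you would call `set_url()` and `read()` on a `RobotFileParser` instead, and its `crawl_delay()` method reports any `Crawl-delay` the site requests.

```python
from urllib.robotparser import RobotFileParser

def allowed(robots_lines, url, user_agent="my-data-bot"):
    """Return True if the given robots.txt rules permit `user_agent` to fetch `url`."""
    parser = RobotFileParser()
    parser.parse(robots_lines)
    return parser.can_fetch(user_agent, url)

# Hypothetical robots.txt content; in production, fetch the site's real file.
SAMPLE_ROBOTS = """\
User-agent: *
Disallow: /checkout/
""".splitlines()

print(allowed(SAMPLE_ROBOTS, "https://example.com/products/123"))  # → True
print(allowed(SAMPLE_ROBOTS, "https://example.com/checkout/cart"))  # → False
```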

Implement Rate Limiting

Avoid overwhelming target websites with rapid-fire requests. Implement delays between requests to mimic human browsing patterns and prevent your IP address from being blocked.
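A minimal rate limiter, assuming a single-threaded scraper: it enforces a minimum gap between requests plus a random jitter so requests are not perfectly periodic. The two-second interval and one-second jitter are arbitrary defaults; if the site’s robots.txt specifies a `Crawl-delay`, use at least that. The clock and sleep functions are injectable only so the behavior can be tested.

```python
import random
import time

class RateLimiter:
    """Enforce a minimum gap between requests, plus random jitter."""

    def __init__(self, min_interval=2.0, jitter=1.0, clock=time.monotonic, sleep=time.sleep):
        self.min_interval = min_interval
        self.jitter = jitter
        self._clock = clock
        self._sleep = sleep
        self._last = None  # time of the previous request, if any

    def wait(self):
        """Block until it is polite to send the next request."""
        if self._last is not None:
            gap = self.min_interval + random.uniform(0, self.jitter)
            remaining = gap - (self._clock() - self._last)
            if remaining > 0:
                self._sleep(remaining)
        self._last = self._clock()

# Usage: call wait() before every request to the same site.
# limiter = RateLimiter()
# for url in urls:
#     limiter.wait()
#     fetch(url)  # your own download function
```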

Handle Dynamic Content

Modern websites often load content dynamically using JavaScript. Ensure your automation tools can wait for content to load or use browser automation tools that render pages completely.

Plan for Website Changes

Websites frequently update their layouts and structure. Build flexibility into your automation scripts and establish monitoring to detect when changes break your data extraction.

Maintain Data Security

Implement appropriate security measures for collected data, especially if it contains sensitive information. Use secure connections, encrypt stored data, and limit access to authorized personnel only.

Start Small and Scale Gradually

Begin with simple, low-risk automation projects to gain experience and confidence. Once you’ve mastered basic implementations, gradually tackle more complex scenarios.

Common Challenges and Solutions

Challenge: Websites Blocking Automated Requests

Solution: Use rotating IP addresses, implement random delays between requests, and mimic human browsing patterns. Consider using residential proxy services for persistent blocking issues.
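Alongside random delays, a common complementary technique (the solution text does not prescribe it) is exponential backoff: when a server answers with HTTP 429 (Too Many Requests) or 503 (Service Unavailable), wait progressively longer before retrying, and honor a `Retry-After` header if one is sent. The sketch below uses only the standard library; the retry limits and delays are example values, and `opener` and `sleep` are parameters purely so it can be tested offline.

```python
import random
import time
import urllib.error
import urllib.request

RETRYABLE = (429, 503)  # Too Many Requests, Service Unavailable

def fetch_with_backoff(url, max_attempts=5, base_delay=1.0,
                       opener=urllib.request.urlopen, sleep=time.sleep):
    """Fetch `url`, retrying with exponential backoff on HTTP 429/503.

    A numeric Retry-After header takes precedence; an HTTP-date value falls
    back to the computed delay to keep the sketch short.
    """
    for attempt in range(max_attempts):
        try:
            with opener(url, timeout=30) as resp:
                return resp.read()
        except urllib.error.HTTPError as err:
            if err.code not in RETRYABLE or attempt == max_attempts - 1:
                raise  # non-retryable error, or out of attempts
            retry_after = (err.headers or {}).get("Retry-After", "")
            if retry_after.strip().isdigit():
                delay = float(retry_after)
            else:
                # 1s, 2s, 4s, ... plus jitter so clients do not retry in lockstep
                delay = base_delay * 2 ** attempt + random.uniform(0, base_delay)
            sleep(delay)

# Usage (placeholder URL): body = fetch_with_backoff("https://example.com/products")
```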

Challenge: Handling JavaScript-Heavy Websites

Solution: Use browser automation tools like Selenium or Puppeteer that can execute JavaScript and wait for dynamic content to load.

Challenge: Maintaining Accuracy When Websites Change

Solution: Implement robust error handling and monitoring systems. Set up alerts when extraction patterns fail and maintain backup extraction methods when possible.
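One way to keep backup extraction methods is an ordered list of strategies, tried until one succeeds. This sketch assumes a price may appear in a `<span class="price">` element, an `itemprop="price"` attribute, or JSON-LD data; those patterns are hypothetical, and regular expressions stand in for a proper HTML parser to keep the example short. Reporting which strategy matched lets monitoring notice when the primary one stops working.

```python
import re

def extract_with_fallback(html, strategies):
    """Try each (name, pattern) strategy in order; return (value, name_of_strategy_used).

    Raises LookupError when every strategy fails, which should trigger an alert.
    """
    for name, pattern in strategies:
        match = re.search(pattern, html)
        if match:
            return match.group(1).strip(), name
    raise LookupError("all extraction strategies failed; the page layout may have changed")

# Hypothetical strategies for a price, primary method first.
PRICE_STRATEGIES = [
    ("css-class", r'<span class="price">([^<]+)</span>'),
    ("microdata", r'itemprop="price"\s+content="([^"]+)"'),
    ("json-ld", r'"price"\s*:\s*"?([\d.]+)'),
]
```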

Challenge: Processing Large Volumes of Data

Solution: Implement parallel processing and optimize your code for performance. Consider cloud-based solutions that can scale automatically based on workload.
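For I/O-bound work such as downloading many pages, a thread pool from Python’s `concurrent.futures` is one simple way to parallelize. In this sketch, `fetch` is a placeholder for your own download-and-parse function, and `max_workers=8` is an arbitrary example; keep concurrency modest, since parallel requests to one site can undo your rate limiting.

```python
from concurrent.futures import ThreadPoolExecutor, as_completed

def fetch_all(urls, fetch, max_workers=8):
    """Run `fetch(url)` concurrently; return (results, errors), both keyed by URL."""
    results, errors = {}, {}
    with ThreadPoolExecutor(max_workers=max_workers) as pool:
        futures = {pool.submit(fetch, url): url for url in urls}
        for future in as_completed(futures):
            url = futures[future]
            try:
                results[url] = future.result()
            except Exception as exc:  # one failed page should not sink the whole batch
                errors[url] = exc
    return results, errors
```

Collecting errors per URL, instead of letting the first exception abort the batch, makes it easy to retry or report only the pages that failed.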

Legal and Ethical Considerations

Web scraping exists in a legal gray area that varies by jurisdiction and use case. Scraping publicly available information for legitimate business purposes is often considered acceptable, but you should:

- Avoid scraping copyrighted content for commercial use

- Respect rate limits and don’t overload target servers

- Check for API alternatives before scraping

- Consult legal counsel for high-risk or large-scale projects

- Consider the ethical implications of your data collection

Measuring Success and ROI

Track key metrics to evaluate your automation success:

Time Savings: Measure hours saved compared to manual processes

Accuracy Improvements: Compare error rates before and after automation

Cost Reduction: Calculate labor cost savings minus tool and maintenance costs

Data Freshness: Monitor how current your automated data remains compared to manual updates

Process Reliability: Track uptime and successful completion rates
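Most of these metrics reduce to simple arithmetic. The sketch below computes time savings, error rates, net cost reduction (labor savings minus tool and maintenance costs), and run reliability from inputs you would track yourself. The field names are hypothetical, maintenance effort is counted in the hours still spent after automation, and the example figures are made up for illustration, not benchmarks.

```python
from dataclasses import dataclass

@dataclass
class MonthlyMetrics:
    """Hypothetical monthly inputs; all counts are assumed to be nonzero."""
    manual_hours: float      # hours the task took by hand
    automated_hours: float   # hours still spent on supervision, fixes, and maintenance
    hourly_cost: float       # labor cost per hour
    tool_cost: float         # subscriptions, hosting, proxies
    records: int
    manual_errors: int
    automated_errors: int
    runs_scheduled: int
    runs_succeeded: int

    def report(self):
        hours_saved = self.manual_hours - self.automated_hours
        return {
            "hours_saved": hours_saved,
            "net_savings": hours_saved * self.hourly_cost - self.tool_cost,
            "error_rate_before": self.manual_errors / self.records,
            "error_rate_after": self.automated_errors / self.records,
            "reliability": self.runs_succeeded / self.runs_scheduled,
        }

# Made-up example month, for illustration only.
example = MonthlyMetrics(manual_hours=40, automated_hours=6, hourly_cost=30,
                         tool_cost=200, records=2000, manual_errors=60,
                         automated_errors=4, runs_scheduled=30, runs_succeeded=29)
report = example.report()
# report["hours_saved"] → 34, report["net_savings"] → 820
```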

Taking Your First Steps Toward Automation

Web data entry automation transforms how businesses handle repetitive data collection tasks. The technology has matured to the point where both technical and non-technical users can implement effective solutions.

Start by identifying your most time-consuming manual data entry tasks. Choose simple, low-risk projects for your initial automation efforts. As you gain experience and confidence, you can tackle more complex scenarios and achieve greater efficiency gains.

Remember that successful automation requires ongoing attention and maintenance. Websites change, requirements evolve, and systems need updates. But the investment in time and resources pays dividends through increased productivity and improved data quality.

The question isn’t whether you should automate web data entry—it’s which processes to automate first and how quickly you can get started.